Introduction to AI and Prompt Engineering

Prompt engineering is the practice of crafting the input you give a large language model so that it produces accurate, relevant, high-quality responses.

The main idea behind prompt engineering is to write prompts carefully so that the AI gives you the kind of answers you want. A well-written prompt does not change the model itself, but it makes the model’s output more useful and improves the experience for users.

To create good prompts, it’s helpful to understand how AI models work and how they respond to different inputs.

Large language models are trained on vast amounts of text and generate output by repeatedly predicting what comes next based on your input. That’s why the way you phrase your prompt directly shapes the content the AI gives you.

By adding clear and detailed context to your prompt, you make it much more likely that the AI gives accurate and useful answers.

Understanding AI Models

- AI models, including large language models, are complex systems; using them well requires a clear understanding of their capabilities and limitations.

- The model’s behaviour is influenced by the prompt design, and understanding this relationship is key to creating effective prompts.

- Generative models, in particular, rely on well-designed prompts to produce coherent and relevant text responses.

- The quality of model responses is directly tied to the quality of the input prompt, which makes prompt engineering a vital step in the process.

- Many chat-based AI systems carry earlier turns of a conversation forward, so you don’t have to re-establish context in every message.
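A common way chat applications achieve this is to resend the earlier turns with every new request, so the model sees the whole conversation each time. The sketch below assumes a generic list of role/content messages (a convention many chat APIs use, though no specific API is implied) and a hypothetical `fake_model` in place of a real model call.

```python
Message = dict[str, str]


def fake_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for a chat model: reports how many messages it saw."""
    return f"(reply after seeing {len(messages)} messages)"


class ChatSession:
    def __init__(self, system_prompt: str) -> None:
        # The system message sets context once; it is resent on every call.
        self.history: list[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = fake_model(self.history)  # the full history goes with each request
        self.history.append({"role": "assistant", "content": reply})
        return reply


chat = ChatSession("You are a concise assistant for a bakery.")
chat.ask("What are your opening hours?")
print(chat.ask("And on Sundays?"))  # (reply after seeing 4 messages)
```

Because the follow-up "And on Sundays?" travels together with the earlier turns, the model can work out what it refers to without the user repeating the question.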

Specific Instructions

Giving clear instructions is very important in prompt engineering. It helps large language models give more accurate and relevant answers. When you clearly explain the task, the format you want, and any limits, the AI is far more likely to do what you intended.

Detailed instructions remove confusion and lower the chances of mistakes, which leads to better results. For example, if you ask for an elevator pitch or a bulleted list, the AI knows how to format the response.

Being specific also helps the AI handle complex tasks better, so the content it generates matches what you asked for and fits your goal.
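One way to apply this is to assemble every prompt from the same labelled parts: the task, the desired format, and any constraints. The `build_prompt` helper and its field names are hypothetical; the point of the sketch is only that each request states all three explicitly.

```python
def build_prompt(task: str, output_format: str, constraints: list[str]) -> str:
    """Combine task, format, and constraints into one explicit instruction."""
    lines = [f"Task: {task}", f"Format: {output_format}"]
    if constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in constraints)
    return "\n".join(lines)


prompt = build_prompt(
    task="Summarise the attached product review.",
    output_format="A bulleted list of at most three points.",
    constraints=["Use plain English.", "Do not mention the reviewer's name."],
)
print(prompt)
```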

Response Format and Generative Models

The way you ask for the response format is an important part of prompt design. It helps shape how the AI will give its answer.

AI models can create many types of responses—from plain text to more complex formats like JSON.

To get the results you want, it's important to know what the model can and can't do. This helps you write better prompts.

Giving examples and adding context can make it easier for the AI to understand the task, which leads to better responses.

You can also use follow-up prompts to continue the conversation without repeating earlier details.

Designing Prompts for Text Generation

Creating prompts for text generation requires understanding both what the AI model can do and what the task requires.

Good prompts give clear instructions, helpful context, and enough details for the AI to produce high-quality answers.

Using specific formats—like XML tags—can help the model follow your instructions more accurately.
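For example, wrapping each part of a prompt in its own tag makes it clear where the instructions end and the material to work on begins. The tag names `instructions` and `document` are arbitrary choices for this sketch, not a required vocabulary.

```python
from xml.sax.saxutils import escape


def tagged_prompt(instructions: str, document: str) -> str:
    """Separate instructions from source material with XML-style tags."""
    # Escape <, > and & in the document so its text cannot close our tags early.
    return (
        f"<instructions>\n{instructions}\n</instructions>\n"
        f"<document>\n{escape(document)}\n</document>"
    )


print(tagged_prompt(
    "Summarise the document in one sentence.",
    "Quarterly sales rose 4% <draft figures>.",
))
```

Escaping the document is a design choice: it costs nothing and prevents pasted text that happens to contain tag-like strings from blurring the boundary between sections.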

It’s also important to test and tweak your prompts multiple times. This helps improve the model’s performance and gets the best results.

Prompting Strategies for Effective Prompts

Using smart prompting strategies—like giving examples and context—can help the AI give better answers.

One useful method is few-shot learning, where you show the model a few examples to guide how it should respond.
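A minimal sketch of a few-shot prompt for sentiment labelling: a handful of solved input/label pairs come first, and the new input is left for the model to complete in the same pattern. The examples and the `Review:`/`Sentiment:` layout are assumptions for illustration.

```python
EXAMPLES = [
    ("The delivery was fast and the food was hot.", "positive"),
    ("My order arrived an hour late and cold.", "negative"),
    ("It was fine, nothing special.", "neutral"),
]


def few_shot_prompt(examples: list[tuple[str, str]], new_input: str) -> str:
    """Show solved examples, then leave the last answer for the model to fill in."""
    parts = ["Classify the sentiment of each review as positive, negative, or neutral.\n"]
    for text, label in examples:
        parts.append(f"Review: {text}\nSentiment: {label}\n")
    parts.append(f"Review: {new_input}\nSentiment:")
    return "\n".join(parts)


print(few_shot_prompt(EXAMPLES, "Great value, I will order again."))
```

Ending the prompt on an unfinished `Sentiment:` line invites the model to continue the pattern with a single label.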

Another helpful approach is retrieval-augmented generation (RAG), where relevant information is retrieved from an external source and added to the prompt as context, helping the model produce better-grounded answers.
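The core of retrieval-augmented generation can be sketched in a few lines: find the stored passages most relevant to the question and place them in the prompt as context. Real systems usually rank passages with vector embeddings; this sketch swaps in simple word overlap so it runs without any libraries, and the passages are invented.

```python
import re

PASSAGES = [
    "Our store opens at 9am and closes at 6pm on weekdays.",
    "Returns are accepted within 30 days with a receipt.",
    "Gift cards never expire and can be used online.",
]


def words(text: str) -> set[str]:
    """Lower-cased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[str], k: int = 1) -> list[str]:
    """Rank passages by words shared with the question (a stand-in for embeddings)."""
    q = words(question)
    return sorted(passages, key=lambda p: len(q & words(p)), reverse=True)[:k]


def rag_prompt(question: str) -> str:
    """Put the retrieved passages in front of the question as context."""
    context = "\n".join(retrieve(question, PASSAGES))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(rag_prompt("How many days do I have for returns?"))
```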

Knowing the pros and cons of each strategy is important for writing strong prompts and getting the results you want.

Effective Prompt Design

To design good prompts, you need to give clear instructions, the right context, and enough details to guide how the AI responds.

Using formats like bullet points can make prompts easier to read and understand.

The more specific and detailed you are, the better the AI will understand the task and give accurate answers.

Testing and improving your prompts step by step is important for getting the best performance from the model.
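Testing prompts step by step is easier with a small, fixed set of test cases that every prompt variant is scored against. In this sketch `fake_model` is a hypothetical stand-in for a real model call, the test cases are invented, and exact-match scoring is just one simple choice of metric.

```python
TEST_CASES = [
    ("I love this phone!", "positive"),
    ("The battery died after a day.", "negative"),
]


def fake_model(prompt: str) -> str:
    """Hypothetical model: only answers tersely when the prompt asks for one word."""
    review = prompt.rsplit("Review:", 1)[1]
    label = "negative" if "died" in review else "positive"
    if "one word" in prompt:
        return label
    return f"I think this review is {label}."


def score(template: str) -> float:
    """Fraction of test cases where the reply exactly matches the expected label."""
    hits = 0
    for text, expected in TEST_CASES:
        reply = fake_model(template.format(review=text)).strip().lower()
        if reply == expected:
            hits += 1
    return hits / len(TEST_CASES)


v1 = "What is the sentiment of this text? Review: {review}"
v2 = "Answer with one word, positive or negative. Review: {review}"
print(score(v1), score(v2))  # 0.0 1.0
```

Here the second variant wins only because it states the output format, which the scoring makes visible at a glance; with a real model you would rerun the same test set after every prompt change.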

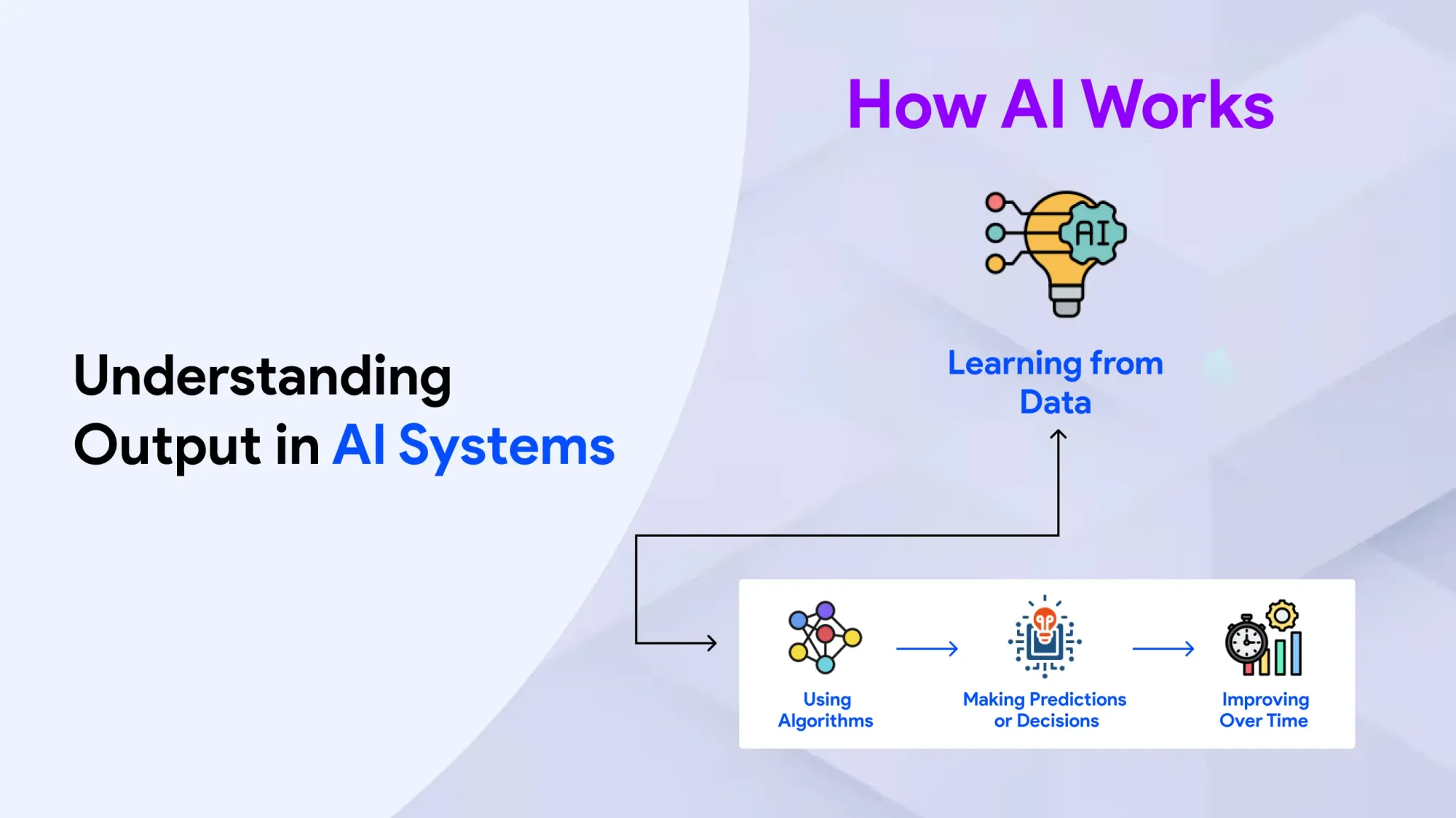

Understanding Output in AI Systems

In AI and large language models, the word “output” means the text or data the model gives back after you enter a prompt. The quality and usefulness of that output depend a lot on how well the prompt is written. Good prompt engineering helps improve output by giving clear instructions and enough context.

The output can come in many formats—from plain text to more complex ones like JSON or XML. Telling the model which format you want helps make sure the response fits your needs. For example, you can ask for a bulleted list or a short pitch to make the result easier to read and use.

Improving the prompt step by step is important for getting better results. Testing and changing the prompt helps cut down on errors and makes the output match your goal more closely. Adding examples in the prompt, like in few-shot learning, can also guide the model to give more accurate answers.

In the end, knowing how to manage and understand the output is key to using AI tools well and getting great results in different tasks.

AI Model Fine-Tuning

Fine-tuning an AI model means continuing to train an already-trained model on additional data so that its weights adapt to a specific task or dataset.

This process can greatly improve the quality of the model’s responses and boost its overall performance.

Techniques like transfer learning and multi-task learning help make fine-tuning more efficient and effective.

Fine-tuning uses smaller, focused datasets to improve the abilities of a model that was already trained on a large amount of general data.

Knowing the pros and cons of different fine-tuning methods is important for getting better results.

Full fine-tuning updates all of the model’s weights, producing a new version of the model with improved performance.

Parameter-efficient fine-tuning (PEFT) updates only a small subset of the model’s parameters, saving memory and making training lighter.

Transfer learning allows a model trained on massive general-purpose datasets to adapt to a more specific task or a specialised domain.

Multi-task fine-tuning trains the AI model on datasets containing instructions for many different tasks over multiple training cycles.

Overall, fine-tuning is quicker and more affordable than training a new model from scratch because it builds on a model that’s already trained.
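To see why parameter-efficient methods save memory, consider LoRA (low-rank adaptation), one widely used PEFT technique, although the text above does not name it. Instead of updating a d × k weight matrix directly, LoRA freezes it and trains two small matrices of shapes d × r and r × k whose product is added to it. The sketch below only counts trainable parameters; the sizes (4096 × 4096, rank 8) are illustrative assumptions.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when every entry of a d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r update B @ A, with B: d x r and A: r x k."""
    return d * r + r * k


d, k, r = 4096, 4096, 8
full, lora = full_params(d, k), lora_params(d, k, r)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For this one matrix, the low-rank update trains well under 1% of the parameters that full fine-tuning would, which is where the memory savings come from.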

The Power of Example in Prompt Engineering

Examples are a powerful part of prompt engineering because they help the AI understand what kind of answer you’re looking for. When you include an example in your prompt, it becomes easier for the model to give the type of response you want.

Including examples alongside your instructions can help the AI understand even better. For instance, if you want a summary, showing an example summary helps the model match your preferred style and length. You can also use examples to show how to complete harder tasks, which reduces mistakes and makes answers more accurate.

An example can be short, like a single word, or long, like a full paragraph. For narrative writing prompts, showing both an input and the expected output helps the AI know what to do. Refining prompts iteratively with examples like these is an important way to get better results.

The clearer and more relevant your example is, the better the AI tends to perform. The same principle applies to fine-tuning: training examples that show exactly how a question should be answered make a big difference. That’s why it’s usually a good idea to include examples in your prompts; they help the AI do its job better.

Generative AI and Prompt Engineering

Generative AI models, like large language models, rely heavily on prompt engineering to produce good-quality responses.

The clearer and more detailed your prompt is, the better the model’s answer tends to be. That’s why prompt engineering is a key part of working with generative AI.

To write effective prompts, it’s important to know what these models can and cannot do.

Techniques like few-shot learning and retrieval-augmented generation help improve the accuracy and usefulness of responses.

Fine-tuning also plays a big role—it allows models to perform well using smaller, topic-specific datasets tailored to your needs.

Few-Shot Learning and Prompting

Few-shot learning means giving the AI a few examples to help it understand how to respond. This can make the model’s answers more accurate and useful.

It works especially well for tasks that need clear and specific responses.

To use few-shot learning effectively, it’s important to know what it does well and where it might fall short.

This method also helps reduce the need for large training datasets, making model development faster and more efficient.

Another helpful technique is instruction fine-tuning, where the model is trained using examples that clearly show how to answer different types of questions.
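Instruction fine-tuning data is often stored as one JSON record per line (JSONL), each pairing an instruction with the desired response. The field names `instruction`, `input`, and `output` and the rendering template below follow a common convention but are assumptions here; check what your training tool actually expects.

```python
import json

records = [
    {"instruction": "Translate to French.", "input": "Good morning", "output": "Bonjour"},
    {"instruction": "Answer the question.", "input": "What is 2 + 2?", "output": "4"},
]


def to_jsonl(rows: list[dict[str, str]]) -> str:
    """Serialise each training example as one JSON object per line."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows)


def to_training_text(row: dict[str, str]) -> str:
    """Render one record as prompt/response text (one possible template)."""
    return (
        f"### Instruction:\n{row['instruction']}\n\n"
        f"### Input:\n{row['input']}\n\n"
        f"### Response:\n{row['output']}"
    )


jsonl = to_jsonl(records)
print(jsonl)
print(to_training_text(records[0]))
```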

The Importance of Clear Instructions in Prompt Engineering

Clear instructions are very important in prompt engineering because they tell the AI exactly what kind of response you want. When your instructions are specific and detailed, the model understands the task better, which helps avoid confusion and mistakes.

This makes the response more accurate and relevant, and it helps the model perform better overall. Also, when you explain the format you want—like asking for an elevator pitch or a bulleted list—it helps the AI give a response that closely matches your request and goal.

Clear instructions lead to better and more useful results.

Next Steps in Prompt Engineering

The next steps in prompt engineering involve continuing to improve how prompts are designed.

This includes trying out newer methods like few-shot learning and retrieval-augmented generation, and learning what each strategy does well and where it might fall short.

To write effective prompts, it’s important to understand what AI models can and can’t do. This helps you get better results.

Testing and adjusting your prompts over time is also key to improving how well the model performs.

Sequential fine-tuning is another useful method. It gradually trains the model on increasingly specific tasks while aiming to preserve what it has already learned.

When writing prompts, it’s important to clearly explain the task so the AI knows exactly what to do. Giving specific instructions for each task helps guide the model’s behaviour, which leads to more accurate and helpful answers. This also helps reduce mistakes, especially in complex tasks.

In prompt engineering, clear context is just as important. The model works much better when it has enough information to understand the situation. Without good context, the results may be vague or off-topic, which is why it matters to include the right background in your prompts.